Executives keep circling back to the same question: “Does this agentic AI stuff actually work beyond the demos?” There is no clean yes-or-no answer, and that uncertainty says a lot about where enterprise agentic AI adoption really stands in 2026.

The Reality Behind the Adoption Numbers

On paper, adoption looks impressive. In practice, MIT reports that 95% of organizations deploying enterprise AI fail to deliver measurable business value after more than a year in production. Budgets get approved, shiny stacks are assembled, and a year later the scoreboard still shows impressive pilots and thin impact.

McKinsey adds another layer to this picture. Around 88% of companies now use AI in some form, yet only 6% qualify as “AI high performers” generating more than 5% EBIT improvement and meaningful qualitative value. The activity is widespread; the ROI is concentrated among a small group that has figured out what the others are missing.

What worries boards and operators alike is that the gap between those two groups is not narrowing. Every new wave of agentic AI pilots, launched without fixing underlying operational issues, quietly widens the distance between experimenters and compounding performers.

Why Agentic AI Deployments Stall After the Demo

Across industries, the same pattern shows up in enterprise agentic AI implementations. Organizations are asking AI agents to operate in environments where the company itself doesn’t properly understand how work actually gets done. Processes live in outdated documentation, tribal knowledge, and local shortcuts that never make it into the design of the agent.

When an AI agent is dropped into that mess, it does exactly what it was asked to do—just not what the business really needs. The result is familiar: expensive pilots that never graduate to production, technically successful proofs of concept that don’t move cost, cycle time, or customer experience, and teams quietly burning out on AI initiatives long before the value arrives.

Over the last wave of agentic AI projects, four fault lines have emerged that separate the small set of organizations turning AI agents into leverage from everyone else.

Four Fault Lines That Decide Who Wins

1. Process Intelligence vs Process Ambiguity

Why Most AI Agents Operate Blind

High performers have done the unglamorous work of seeing their operations clearly. They use process intelligence platforms to analyze real execution data and understand how work flows across systems, teams, geographies, and edge cases, instead of relying on idealized process maps or PowerPoint diagrams.

Most organizations are not there. Roughly 82% lack AI‑ready enterprise data and the process intelligence needed to train and operate AI agents with confidence. In that environment, every agentic AI deployment is guesswork. Agents are pointed at processes that look coherent on paper but behave very differently in practice, and the gulf between design and reality shows up as failure modes no model can magically fix.

How Process Intelligence Changes AI Agent Success Rates

Process intelligence platforms reveal three dimensions that static documentation cannot capture: how work actually happens across dozens of variants, where exceptions consistently break workflows, and how decisions are truly constrained in practice versus policy. This operational understanding grounds AI agents in reality rather than assumptions.
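
To make the idea of variants and exceptions concrete, here is a minimal sketch of how they might be derived from an event log. The log rows, activity names, and the `variants` and `exception_rate` helpers are all invented for illustration; commercial process intelligence platforms do far more than this, and nothing here reflects any particular vendor's API.

```python
from collections import Counter, defaultdict

# Hypothetical event log rows: (case_id, timestamp, activity).
# These values are invented; real logs come from system exports.
EVENT_LOG = [
    ("c1", 1, "receive_invoice"), ("c1", 2, "match_po"), ("c1", 3, "approve"), ("c1", 4, "pay"),
    ("c2", 1, "receive_invoice"), ("c2", 2, "match_po"), ("c2", 3, "approve"), ("c2", 4, "pay"),
    ("c3", 1, "receive_invoice"), ("c3", 2, "match_po"), ("c3", 3, "manual_review"),
    ("c3", 4, "approve"), ("c3", 5, "pay"),
    ("c4", 1, "receive_invoice"), ("c4", 2, "manual_review"), ("c4", 3, "reject"),
]


def variants(event_log):
    """Group events by case, order them by timestamp, and count each distinct path."""
    traces = defaultdict(list)
    for case_id, timestamp, activity in event_log:
        traces[case_id].append((timestamp, activity))
    paths = (tuple(a for _, a in sorted(events)) for events in traces.values())
    return Counter(paths)


def exception_rate(variant_counts, exception_activity):
    """Share of cases whose path contains the given exception activity."""
    total = sum(variant_counts.values())
    hits = sum(n for path, n in variant_counts.items() if exception_activity in path)
    return hits / total


VARIANT_COUNTS = variants(EVENT_LOG)
for path, count in VARIANT_COUNTS.most_common():
    print(count, " -> ".join(path))
print("manual_review rate:", exception_rate(VARIANT_COUNTS, "manual_review"))
```

Even in this toy log, four cases produce three different paths, and half of them pass through a manual review step that an idealized process map would probably omit.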

2. Workflow Redesign vs Bolt‑On Automation

The Real Source of AI ROI

Some organizations treat enterprise agentic AI as a chance to rethink how work should flow end‑to‑end. They strip processes back to first principles: which decisions genuinely need humans, which tasks can run in parallel, where exceptions should surface, and how AI agents and people share responsibility over time. When this kind of redesign happens, it is not unusual to see 60–90% faster cycle times and up to 80% of routine decisions automated.

Others simply graft AI agents onto existing workflows, swapping out a human step for an AI step and calling it transformation. Those agentic AI projects typically plateau at 20–40% efficiency gains, and even those improvements often fail to travel cleanly to the P&L because the underlying process remains fragmented and fragile.

3. Business Outcomes vs Technical Metrics

Measuring What Actually Drives AI ROI

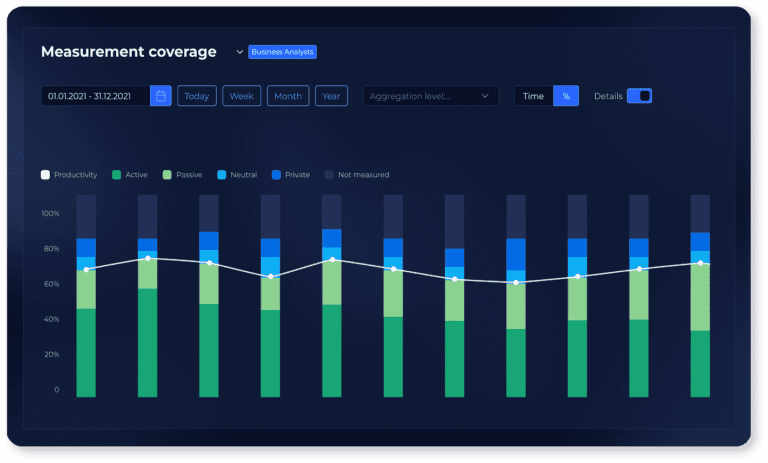

The organizations pulling away from the pack measure agentic AI success in business terms. They track metrics like revenue per process cycle, cost per transaction, and the impact on customer satisfaction, and they link AI agent behavior directly to these outcomes. This is how real AI ROI gets proven and defended.

In contrast, many teams still optimize for technical comfort metrics. Dashboards celebrate numbers like “tasks automated per day” or “average handle time reduction,” but no one can clearly show how those improvements show up in EBIT or working capital. This is one of the reasons 60% of organizations report operational improvements but struggle to realize material financial returns despite significant agentic AI investment.

4. Governance and Visibility vs Black‑Box Agents

Building Trust in AI Agent Decisions

Where enterprise agentic AI deployments work, governance is not an afterthought. Leaders insist on line of sight into how AI agents behave: audit trails, explanations for decisions, clear links to business rules and compliance constraints. That transparency makes it possible to debug, adjust, and scale AI agent implementations without fear.

Where deployments stall, AI agents behave like black boxes. Around 45% of organizations cite lack of visibility into agent decisions as a barrier to scaling, and many encounter “technically correct” actions that still violate policy or damage the customer experience because the agent never had access to the real constraints people apply instinctively.
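
One way to picture an audit trail that links each agent decision to a business rule is the sketch below. The `Rule` and `AuditRecord` structures, the `R-REFUND-01` identifier, and the 100 threshold are hypothetical and exist only for illustration; a real deployment would take its rules from policy owners and store records somewhere durable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Rule:
    """A business rule the agent must respect (hypothetical structure)."""
    rule_id: str
    description: str
    max_amount: float


@dataclass(frozen=True)
class AuditRecord:
    """One agent decision, with the rule and explanation behind it."""
    agent: str
    action: str
    amount: float
    allowed: bool
    rule_id: str
    explanation: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


AUDIT_LOG: list[AuditRecord] = []

# Invented rule for illustration; real thresholds come from policy owners.
REFUND_RULE = Rule("R-REFUND-01", "Agent may refund up to 100 without review", 100.0)


def decide_refund(agent: str, amount: float, rule: Rule) -> AuditRecord:
    """Check the request against the rule and log the decision either way."""
    allowed = amount <= rule.max_amount
    explanation = (
        f"{amount:.2f} is within the {rule.max_amount:.2f} limit"
        if allowed
        else f"{amount:.2f} exceeds the {rule.max_amount:.2f} limit; escalate to a human"
    )
    record = AuditRecord(agent, "refund", amount, allowed, rule.rule_id, explanation)
    AUDIT_LOG.append(record)
    return record


small = decide_refund("refund-agent", 40.0, REFUND_RULE)
large = decide_refund("refund-agent", 400.0, REFUND_RULE)
for rec in AUDIT_LOG:
    print(rec.timestamp, rec.rule_id, rec.allowed, rec.explanation)
```

The design choice worth noting is that blocked actions are logged as well as allowed ones, so reviewers can see where the agent hit a constraint, not only what it did.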

The 2026–2028 Window: Why Timing Now Matters for Enterprise Agentic AI

Only about 17% of organizations have put agentic AI into production so far, but that is changing fast. Gartner’s latest survey shows that 64% of technology leaders plan to deploy agentic AI within the next 12–24 months.

The calendar matters for AI ROI timelines. A company that begins serious agentic AI deployment now will have months of operational learning under its belt by mid‑2026 and will be refining second‑ and third‑generation AI agents by late 2027. By then, slower movers will still be debating frameworks or running their first controlled pilots.

The value at stake is not abstract. For a business with $10B in revenue, an EBIT improvement worth 5% of revenue, driven by well‑designed, grounded enterprise agentic AI, translates into roughly $500M in annual value. If the 5% is instead measured against existing EBIT, the figure scales down with the company's margin. The organizations that reach that level of AI ROI first create a flywheel: the returns fund further investment, attract better talent, and generate proprietary operational data that makes every new AI agent cheaper, faster, and more accurate to deploy.
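
The arithmetic behind a figure like this depends on how a "5% EBIT improvement" is read. The sketch below works through two readings for a $10B business. The function names are illustrative, and the 10% baseline EBIT margin is a hypothetical assumption that does not come from the text.

```python
def uplift_as_share_of_revenue(revenue: int, pct_of_revenue: int) -> float:
    """EBIT gain when the improvement is stated as a percentage of revenue."""
    return revenue * pct_of_revenue / 100


def uplift_relative_to_ebit(revenue: int, ebit_margin_pct: int, improvement_pct: int) -> float:
    """EBIT gain when the improvement is stated relative to existing EBIT."""
    baseline_ebit = revenue * ebit_margin_pct / 100
    return baseline_ebit * improvement_pct / 100


REVENUE = 10_000_000_000       # $10B, from the text
ASSUMED_EBIT_MARGIN_PCT = 10   # hypothetical margin, not from the text

SHARE_OF_REVENUE = uplift_as_share_of_revenue(REVENUE, 5)
RELATIVE_TO_EBIT = uplift_relative_to_ebit(REVENUE, ASSUMED_EBIT_MARGIN_PCT, 5)

print(f"5% of revenue:       ${SHARE_OF_REVENUE:,.0f}")
print(f"5% of baseline EBIT: ${RELATIVE_TO_EBIT:,.0f}")
```

Under the first reading the gain is $500M a year; under the second, with the assumed 10% margin, it is $50M. Either way the flywheel argument holds, but the size of the prize should be stated with the basis made explicit.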

Late entrants face a different slope in their agentic AI journey. They rush through steeper learning curves, compete for scarce expertise, and must catch up with rivals that already know which processes are worth automating with AI agents, which guardrails matter, and which pitfalls to avoid.

The Foundation That Actually Moves the Needle

Many teams still anchor their enterprise agentic AI strategy around model choices, architecture diagrams, and vendor roadmaps. Those questions matter, but they are not what separates organizations compounding AI ROI from those trapped in pilot theater.

The real differentiator is operational readiness: how well the business understands its own work, and how far that understanding has been turned into something AI agents can actually use. The companies starting to pull away have invested in process intelligence platforms that deliver continuous, data‑driven insight into real workflows, including the variants, workarounds, and edge cases that never make it into formal documentation.

That process intelligence capability changes the nature of agentic AI entirely. With process intelligence, you are asking an AI agent to move through a well‑mapped landscape, where constraints, handoffs, and goals are visible. Without it, you are asking the agent to improvise in the dark and hoping it stumbles into value before it stumbles into risk.

How to Treat Agentic AI in Strategic Planning

Over the next 12–18 months, the most important decision for enterprise agentic AI is not which model to buy next. It is how you choose to frame AI agents in your strategy: as an experiment in automation, or as a lever for capital allocation and process redesign that drives measurable AI ROI.

Leaders who take the latter view do a few things differently:

- They pick a handful of high‑value processes and ask where cycle time, decision quality, and cost‑to‑serve could shift meaningfully through agentic AI, not just marginally.

- They invest in process intelligence platforms to map how those processes really run today, including exceptions and informal workarounds, before putting an AI agent anywhere near them.

- They start building semantic models of the business—explicit representations of roles, rules, thresholds, and decision paths—that no vendor can package and no competitor can easily copy once established.
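
As a rough illustration of the last point, a semantic model can start as nothing more than roles, thresholds, and a decision path written down explicitly. The role names, amounts, and the `route` helper below are hypothetical; a real model would be derived from how approvals actually run in the business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovalRule:
    """Who may approve, and up to what amount (hypothetical structure)."""
    role: str
    max_amount: float


# Invented escalation path, ordered from lowest to highest authority.
APPROVAL_PATH = [
    ApprovalRule("ai_agent", 1_000),
    ApprovalRule("team_lead", 25_000),
    ApprovalRule("finance_director", 250_000),
]

# Fallback role when no rule in the path covers the amount (also invented).
FALLBACK_ROLE = "executive_committee"


def route(amount: float, path=APPROVAL_PATH) -> str:
    """Return the first role in the path whose threshold covers the amount."""
    for rule in path:
        if amount <= rule.max_amount:
            return rule.role
    return FALLBACK_ROLE


for amount in (500, 12_000, 90_000, 1_000_000):
    print(amount, "->", route(amount))
```

Because the thresholds live in data rather than in an agent's prompt or a person's head, the same model can be reused by several agents and reviewed by the people who own the policy.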

This approach to enterprise agentic AI is less glamorous than a new demo, but it builds something far more durable: a body of operational understanding captured through process intelligence that can be reused across AI agents, teams, and use cases.

Common Questions About Enterprise Agentic AI Implementation

What percentage of AI deployments actually achieve ROI?

By McKinsey's measure, only 6% of organizations reach high-performer status, defined as more than 5% EBIT improvement from AI initiatives. The gap between widespread adoption (88%) and meaningful AI ROI (6%) highlights the operational readiness problem most enterprises face.

What is the main reason agentic AI pilots fail to scale?

Organizations deploy AI agents into processes they don’t fully understand, lacking the process intelligence and operational visibility needed for production success. Without this foundation, even sophisticated AI agents operate blind.

How does process intelligence improve AI agent performance?

Process intelligence platforms capture how work actually flows, where exceptions occur, and how decisions are constrained in practice. This operational context grounds AI agents in reality, dramatically reducing failures and improving AI ROI by ensuring agents optimize real workflows rather than assumptions.

What’s the timeline for achieving meaningful AI ROI with agentic AI?

Organizations deploying enterprise agentic AI in early 2026 will have 18–24 months of operational learning by late 2027, positioning them to achieve sustainable AI ROI while competitors are still running pilots. Early experience compounds through better data, refined governance, and institutional knowledge.

The Decision Point for 2026

The window is open right now for enterprise agentic AI. Organizations that begin serious deployment and grounding work this year will have 18–24 months of lived experience with AI agents by late 2027. That kind of experience cannot be bought off the shelf or compressed into a single program.

Every leadership team faces a simple, uncomfortable choice about their agentic AI strategy. Use 2026 to build the foundations – process intelligence, governance frameworks, and semantic models of how your business actually runs – or watch others do it and negotiate from behind later.

The future will belong to organizations whose AI agents operate in alignment with operational reality rather than in isolation from it. Real AI ROI comes from agents grounded in process intelligence, not just deployed with impressive models. The work to make that possible starts long before the next demo.

Want the complete roadmap for enterprise agentic AI success?

Our comprehensive white paper explores all four critical phases: from understanding the inflection point to implementing governance frameworks that scale.

Download “Why AI Agents Keep Failing: The Operational Readiness Gap” to access the full strategic playbook, including ROI frameworks, process redesign methodologies, and expert insights from industry leaders.
